AI adoption generates risk at unprecedented speed and scale. Constrain that risk with Imbrulo Private AI solutions.

Private AI

Deployed at the edge or in a private cloud, under your governance.

Trusted by professionals in:

Financial Services

Legal Practices

Healthcare

Government

Manufacturing

Research & Design

and other sensitive industries.

AI Is Moving Faster Than Policy, Controls, and Risk Teams

AI development speed is breathtaking

AI capabilities are evolving faster than most organizations can govern, and adoption is outpacing security and compliance oversight. As teams use public AI tools for real work, sensitive data can cross into third-party systems and outputs can be difficult to audit or explain.

AI concentrates risk quietly

Small missteps and invisible data disclosures accumulate across thousands of interactions and automated workflows. When these deferred errors surface through an audit, breach, lawsuit, or public incident, they can cascade instantly into outsized, enterprise-wide damage.

Public AI Risk Factors

Mainstream AI from providers like OpenAI, Google, Microsoft, and Anthropic runs outside your environment via external services and APIs.

When you share sensitive data with these services, the business risk may be much greater than you realize.

Public AI turns sensitive work into third-party data exposure.

Prompts, uploads, and context can leave your environment, crossing vendors, sub-processors, and unknown retention paths.

You can’t govern what you can’t see.

Public AI is a black box: limited logging, limited auditability, and limited control over where data goes and how long it persists.

Confidentiality and legal privilege are fragile.

A single paste of client data, contract terms, or proprietary information can create irreversible confidentiality and compliance fallout.

Compliance doesn’t tolerate ambiguity.

Data residency, retention, access control, and audit requirements can’t be “best effort” when regulators ask for proof.

Vendor drift becomes business drift.

Model updates, policy changes, and pricing shifts can change outputs and disrupt critical workflows overnight.

Shadow AI becomes a parallel system of record.

Teams start operating inside chat tools, moving knowledge and decisions outside approved governance and security controls.

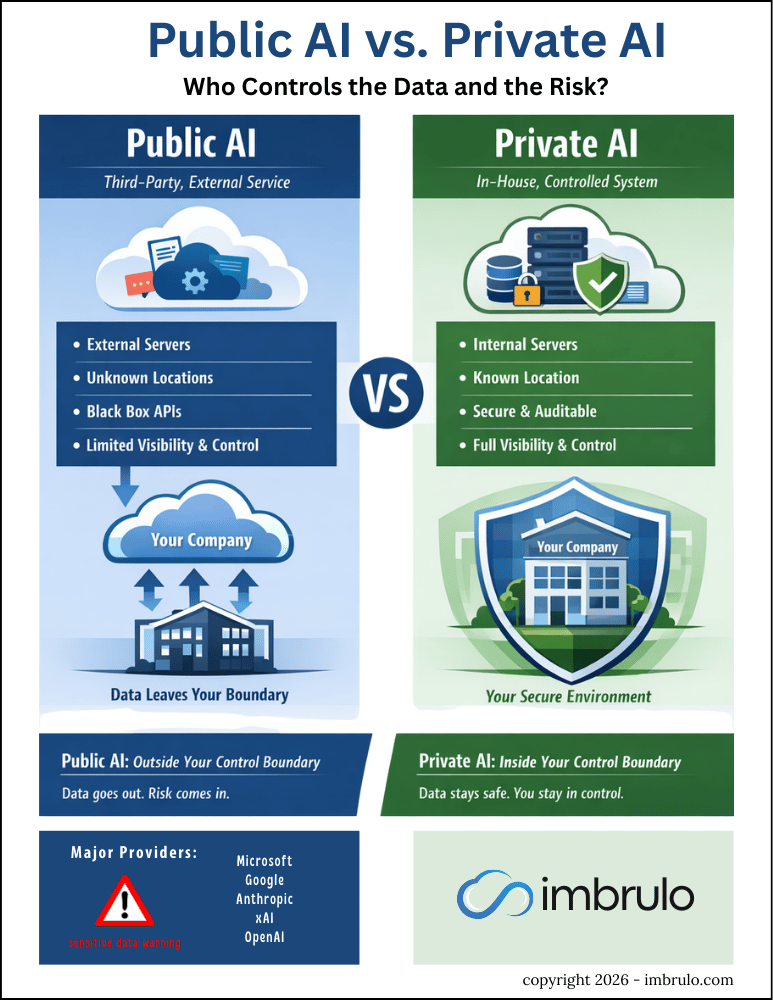

Public AI vs. Private AI

Who Controls Your Data and Manages the Risk?

Data Control Boundaries

Public AI operates outside your control boundary, sending prompts and documents to third-party, black-box services with limited visibility and governance.

Private AI keeps data and model execution inside your environment, enabling enforceable security, auditability, and policy control.

AI Provider Landscape

Most mainstream “public AI” offerings from providers like OpenAI, Google, Microsoft, and Anthropic are consumed as external, API-delivered services, where prompts and context are processed outside your environment. Even when wrapped in enterprise plans, the underlying model runtime remains provider-operated, so governance depends on the provider's controls.